I recently discovered this special issue of the Proceedings of the IEEE dealing with machine ethics and AI in autonomous systems. (I actually landed on Katina Michael’s page first, which gives a nice overview.) I haven’t had a chance to explore the special issue in depth yet, but I hope to set aside some time for that soon. Whenever I bring this topic up with my students, it stirs up a great deal of interest and discussion. Thinking about it reminds me of an incident involving an autonomous system that I experienced firsthand in 1991.

During Operation Desert Storm, there was a friendly fire incident in which the battleship USS Missouri was struck by rounds from the Phalanx Close-In Weapon System, or CIWS (pronounced "sea-wiz"), of the escort ship USS Jarrett. Over the years, I have come to realize that the Jarrett's CIWS was functioning at that time as an autonomous robot: a weapon system with its own sensors and computerized decision-making capability. I don’t know whether the incident has ever been described this way, but it may well be the first time an autonomous weapon system accidentally opened fire on friendly forces during combat.

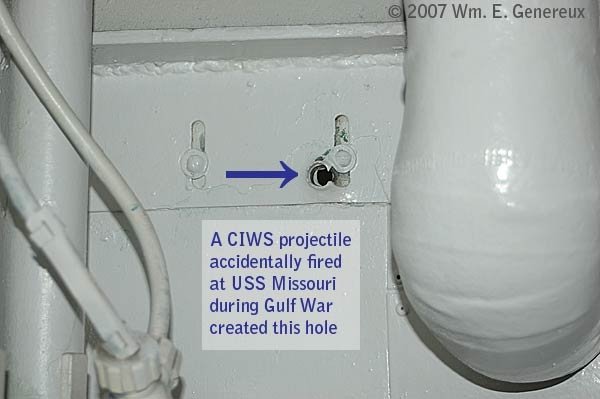

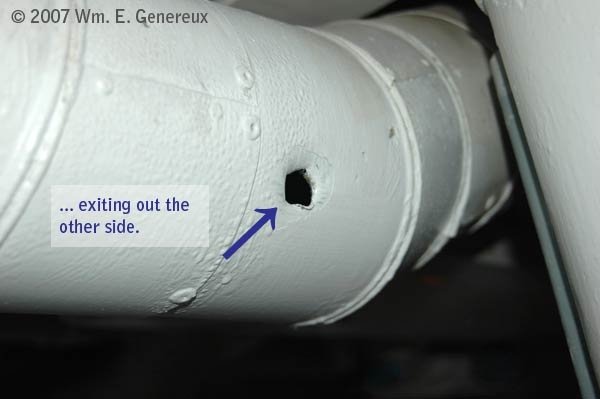

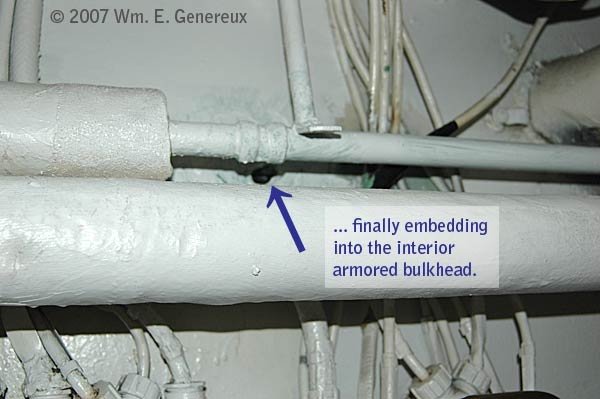

In February 1991, while the Missouri was conducting shore bombardment operations off the coast of Kuwait, Iraqi forces launched two Silkworm anti-ship missiles toward the battleship and its escort ships. The Missouri fired its SRBOC chaff as a missile countermeasure. The nearby USS Jarrett, with its CIWS operating in full-automatic (autonomous) mode, sensed the Missouri’s airborne chaff canister, interpreted it as an inbound threat, and fired a burst of CIWS rounds at it. Some of these rounds struck the battleship and penetrated several bulkheads of the superstructure, stopping in the passageway just outside the captain’s cabin. I took some photos of the holes, which still exist, when I last visited the ship in 2007.

Fortunately, no one was seriously injured in the Missouri incident, but there will undoubtedly be more unintended interactions between autonomous weapon systems and humans. It reminds me of this awful scene from the 1987 science fiction movie *RoboCop*.

You can see the full *RoboCop* film clip here. (Warning: the clip contains the graphic violence of an R-rated film and is not suitable for children.)

There is really no way to anticipate every situation and consequence of a new technology before actually using it. Autonomous systems are interesting because anticipation is, by nature, exactly what they attempt: sense the present, predict the future, and act accordingly. Whenever humans build automated systems, there will always be conditions that lead to unintended consequences. There have already been a number of incidents involving automated driving and flight systems in Tesla cars and Boeing aircraft.

We will continue to see such incidents as more and more systems become automated, and we need to keep talking about how best to adopt and implement autonomous systems.

About the Author: Bill Genereux served as a fire-control technician aboard the battleship USS Missouri during Operation Desert Storm in 1991.